Tapestry’s AI tools power groundbreaking new collaboration with PJM and Google

As electricity demand surges in the U.S. and around the world, developers are proposing more new energy projects than ever to meet the growing need. But the existing processes for reviewing and approving their applications to connect to the grid are struggling to keep pace with the unprecedented growth in forecasted electricity use, right when businesses and consumers need new energy the most.

At Tapestry, we believe AI has the potential to meaningfully improve how power system operators make decisions about the grid. We use AI to make connecting new energy to the grid reliable, affordable, and rapid by modernizing the tools operators use to manage and plan the electric grid.

While chatbots get most of the attention, AI is a broad umbrella of distinct technologies that can help in many different ways across the energy system. Tapestry is working on dozens of use cases applying AI to the grid. Here, we focus on just one of them to show how a specific form, agentic AI, can directly improve grid planning processes.

Agentic AI is built to achieve goals without constant supervision. Using agentic AI involves creating individual processes, called agents, that find information, analyze it, and make decisions over multiple steps.

Consider the example of planning a dinner out with friends: you may need to search for restaurants, check ratings, coordinate calendars, book the restaurant, and communicate the plans. Agentic AI breaks down problems in a similar way, with one agent for each step (e.g., a ratings-checker agent, a booking agent), all reporting back to a central orchestrator. To accomplish their task, these agents can use different tools, such as large language models (LLMs) and other machine learning (ML) models, or simple scripts, based on each agent’s own instruction set and specific context.
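The dinner example can be sketched in a few lines of Python. This is only an illustration of the orchestrator pattern: every function name and the restaurant data are hypothetical, and each "agent" is a plain function standing in for what could be an LLM call, an ML model, or a script.

```python
from typing import Callable, List

# An agent takes the shared state and reports back new facts to merge into it.
Agent = Callable[[dict], dict]

def search_agent(state: dict) -> dict:
    # Stand-in for a real search: returns a fixed, made-up candidate list.
    return {"candidates": [{"name": "Luigi's", "rating": 4.6},
                           {"name": "Noodle Bar", "rating": 4.1}]}

def ratings_agent(state: dict) -> dict:
    # Picks the best-rated candidate found by the search step.
    best = max(state["candidates"], key=lambda r: r["rating"])
    return {"choice": best["name"]}

def booking_agent(state: dict) -> dict:
    # Stand-in for a booking call.
    return {"booking": f"Table booked at {state['choice']}"}

def orchestrate(steps: List[Agent]) -> dict:
    """Central orchestrator: run agents in order and merge their reports."""
    state: dict = {}
    for agent in steps:
        state.update(agent(state))
    return state

plan = orchestrate([search_agent, ratings_agent, booking_agent])
print(plan["booking"])
```

Each agent sees only the shared state and returns a small, checkable result, which is what lets the orchestrator swap tools in and out per step.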

Agentic AI is particularly good at problems that are well-defined, but complex and information-dense; see recent applications in law and healthcare. These are tasks that a standard chat-based LLM will struggle with, because its limited context window (the amount of information an LLM can process at a time) and processing capacity make it liable to hallucinate or crash. By breaking a task into atomic units, each performed by an agent within narrowly constrained conditions, agentic AI can overcome these limitations.
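One simple way to picture "atomic units" is splitting a long document into pieces that each fit within a fixed budget. The sketch below is an assumption for illustration, not a description of any particular product: it uses word count as a rough stand-in for model tokens, and `chunk_pages` is a hypothetical helper.

```python
from typing import List

def chunk_pages(pages: List[str], budget: int) -> List[List[str]]:
    """Group consecutive pages so each group stays within `budget` words.

    A single page larger than the budget still gets its own group.
    """
    chunks: List[List[str]] = []
    current: List[str] = []
    used = 0
    for page in pages:
        size = len(page.split())
        # Start a new chunk when adding this page would exceed the budget.
        if current and used + size > budget:
            chunks.append(current)
            current, used = [], 0
        current.append(page)
        used += size
    if current:
        chunks.append(current)
    return chunks

pages = ["a b c", "d e", "f g h i", "j"]
chunks = chunk_pages(pages, budget=5)
print(len(chunks))
```

Each resulting chunk could then be handed to a separate agent with its own narrow instructions.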

For product teams, training an agentic AI system requires a combination of subject-matter knowledge, user empathy, engineering, research, and testing. The design process typically includes:

Power systems engineers encounter many challenges in operating and planning the grid. Much of their work involves the sort of complex, multi-step, nuanced decisions with which today’s AI agents are well-suited to help. To understand those challenges, we are collaborating with grid operators around the world, including PJM Interconnection (North America’s largest Regional Transmission Organization, or RTO). Our teams are working together to address some of the most critical challenges with the support of agentic AI.

The interconnection challenge

Interconnection of new generation is an industry-wide challenge that warrants an agentic approach. The process is fairly universal: if a developer wants to connect an energy-generating project to the grid, it must submit an application describing its plans in great detail. Then, the grid operator—in coordination with the local electricity service provider (typically the local utility)—reviews the provided data and performs a technical analysis of the impacts the proposed generation may have on the transmission system, and determines any upgrades needed to safely interconnect.

Today, this interconnection process is a critical bottleneck. In the U.S., electricity demand is forecast to increase five times faster between 2025 and 2035 than in the previous decade. The U.S. will need every available gigawatt of capacity to meet this growing demand over the next few years. Speeding up the process of moving planned generation resources through the interconnection process and into operation will be critical to support the nation’s leading role in the global digital economy.

Currently, the interconnection study process (for example, for developers in PJM’s territory) may take close to two years due to the complexity of integrating resources into the grid; coordination between the RTO, developers, and transmission owners; and the time-consuming, labor-intensive processes for reviewing lengthy and detailed interconnection applications.

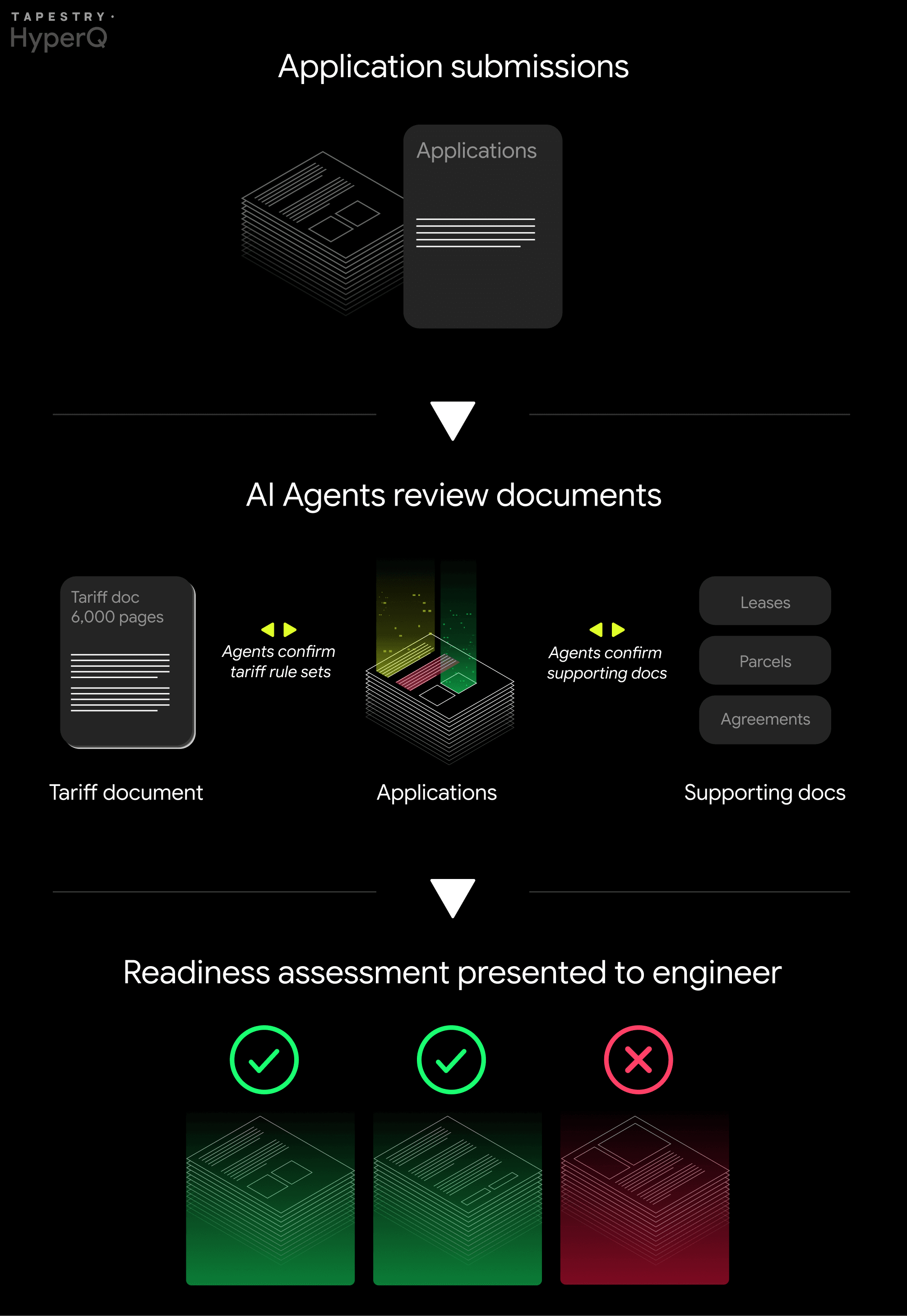

For many grid operators, initial applications arrive in batches that can reach many hundreds of submissions. Once the application deadline arrives, the clock starts ticking: trained engineers have a set number of business days to review all applications and respond to developers, noting any problems in the applications. The time involved in reviewing and approving applications pulls engineers away from other analytical activities necessary to ensure the transmission system remains reliable.

Engineers reviewing applications do so by consulting manuals and tariffs—complex rules that govern the entire interconnection process—and manually searching across the entire application for direct supporting evidence (for example, exact passages in legal documents) that a proposed project complies with all tariff requirements. In the U.S., every RTO and Independent System Operator (ISO) is required to publish a tariff document, which is often hundreds of pages long; when you include all of the attachments, schedules and manuals that accompany the core tariff document, the total volume can easily span thousands of pages. And that’s just the rulebook.

Reconciling these tariffs with the information in new generation applications is a highly challenging process, in part because the paperwork submitted for a single application can range in size from fewer than ten pages to several thousand—with an average of close to 1,000 pages each when factoring in the accompanying appendices, metadata and supporting technical and legal documentation. The work is also repetitive: applications may be reviewed in full at least three times (once for each phase of the interconnection process), with additional review required as developers correct deficiencies or discrepancies, or introduce new information.

The sheer volume of this information, combined with the fact that evaluation can be broken into small steps defined by clear procedural rules, makes reviewing interconnection applications an excellent candidate for agentic AI assistance.
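The volume described above can be put in rough numbers. The average page count and the three review passes come from the text; the batch size of 500 is an assumed figure chosen only to illustrate "many hundreds" of submissions.

```python
# Back-of-envelope estimate of review volume for one application batch.
applications_in_batch = 500          # assumption: illustrative batch size
avg_pages_per_application = 1_000    # from the text: close to 1,000 pages on average
review_passes = 3                    # from the text: at least one full review per phase

pages_per_pass = applications_in_batch * avg_pages_per_application
total_page_reviews = pages_per_pass * review_passes

print(f"{pages_per_pass:,} pages per pass")
print(f"{total_page_reviews:,} page reviews across all passes")
```

Under these assumptions, a single batch implies on the order of half a million pages per pass, before counting any re-review triggered by corrections.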

Spotlight: Application readiness review

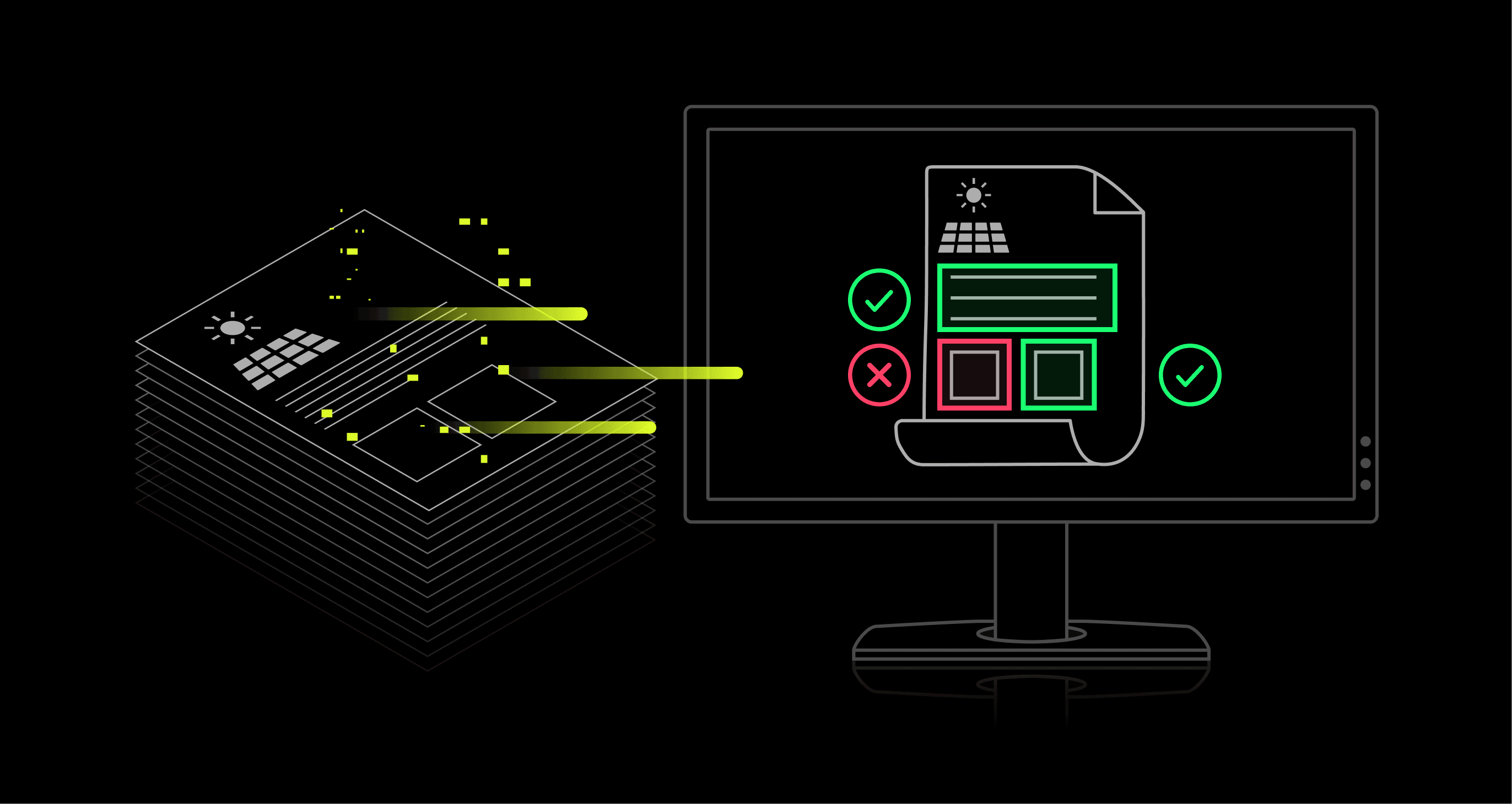

Application review starts with verifying that the many data inputs submitted in an interconnection application are complete and meet the tariff requirements.

This readiness review requires extracting, checking, and verifying all supporting evidence in the application. While the exact number depends on the grid operator’s rules, interconnection applications may require scrutiny of hundreds of input parameters to understand if a project meets the necessary requirements.

As one of the initial steps in the interconnection process, this detailed review prevents low-quality projects from taking up valuable resources dedicated to processing new service requests. Early flagging of unverified, conflicting, or incorrect information also reduces the number of projects that add to grid upgrade cost estimates but are unlikely to actually be built.

Let's look at a specific example of application review: confirming that an energy developer submitting an application legally controls the land where they would like to construct a new generation plant. Verifying this information ensures that the site is both legally within the developer’s control (land rights) and physically suitable (site characteristics, including whether a developer has rights over an area large enough to build the proposed power plant and any necessary infrastructure). These requirements ensure that only truly ready projects can enter and proceed through the queue.
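As a rough illustration of the site control check, the sketch below tests two things from the paragraph above: that each parcel has evidence of legal control, and that the controlled area is large enough. The field names, the single boolean for "signed agreement", and the acreage figures are all hypothetical simplifications; actual tariff rules are far more detailed.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Parcel:
    parcel_id: str
    acres: float
    has_signed_agreement: bool  # assumed stand-in for deed, lease, or option evidence

def check_site_control(parcels: List[Parcel], required_acres: float) -> List[str]:
    """Return a list of human-readable issues; an empty list means no issues found."""
    issues = []
    for p in parcels:
        if not p.has_signed_agreement:
            issues.append(f"{p.parcel_id}: no signed agreement found")
    # Only parcels with evidence of control count toward the required area.
    controlled = sum(p.acres for p in parcels if p.has_signed_agreement)
    if controlled < required_acres:
        issues.append(
            f"controlled area {controlled:.1f} acres is below required {required_acres:.1f}"
        )
    return issues

parcels = [Parcel("P-1", 40.0, True), Parcel("P-2", 25.0, False)]
issues = check_site_control(parcels, required_acres=60.0)
for issue in issues:
    print(issue)
```

Returning a list of issues rather than a single pass/fail flag mirrors the goal of giving reviewers and developers specific items to resolve.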

While the exact review varies by RTO, data verification is common across many parts of the energy system; it will likely be familiar to any engineer who has manually (and often painstakingly) reviewed and cross-checked between multiple information-dense documents and files.

HyperQ is a grid interconnection platform that accelerates the processing of applications from new energy sources looking to connect to the grid. It helps system planners and developers expedite reviews for new energy projects as well as verify data and grid models.

HyperQ analyzes interconnection applications using agentic AI and machine learning, and organizes them within a simple, centralized web interface. It processes an entire batch of applications at once, individually analyzing each one and offering an easy-to-understand evaluation of an application’s quality. This assessment helps engineers home in on the most critical information—and assess whether the application might contain deficiencies or missing elements.

For each application, HyperQ creates a detailed Application Report, a dashboard offering a natural-language explanation of how it reached its assessment. HyperQ pairs this explanation with citations highlighting which specific parts of the application (such as parcel references and key legal clauses) informed its readiness assessment.
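One way to think about a report entry is as a finding paired with the citations that support it. The structure below is a hypothetical sketch for illustration only; the class names, status labels, and sample data are assumptions and do not describe HyperQ's actual data model.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Citation:
    document: str   # file the evidence came from
    page: int
    excerpt: str    # the passage that informed the assessment

@dataclass
class Finding:
    requirement: str
    status: str      # assumed labels: "met", "not met", "needs review"
    explanation: str  # natural-language reasoning shown to the reviewer
    citations: List[Citation] = field(default_factory=list)

def render(finding: Finding) -> str:
    """Format a finding and its supporting citations as plain text."""
    lines = [f"[{finding.status.upper()}] {finding.requirement}",
             f"  {finding.explanation}"]
    for c in finding.citations:
        lines.append(f'  source: {c.document}, p. {c.page}: "{c.excerpt}"')
    return "\n".join(lines)

finding = Finding(
    requirement="Site control",
    status="met",
    explanation="Lease covers the parcel for the full project term.",
    citations=[Citation("lease.pdf", 3, "Lessee shall have exclusive use of Parcel P-1")],
)
text = render(finding)
print(text)
```

Keeping the excerpt and page number next to each conclusion lets a reviewer verify the evidence directly instead of searching for it.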

HyperQ simplifies the expert reviewer’s work by integrating applicants’ property-related documents, HyperQ-generated reports, and other application materials in one place, with the relevant documents organized into clusters. This clustering approach addresses a significant challenge in confirming land rights: many legal agreements have multiple amendments, and a decision on whether a parcel satisfies the constraints needs to take into account this entire set of interrelated documents. Clustering avoids the painstaking cross-referencing and potential mistakes that come from managing this reconciliation process manually.
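The clustering idea can be illustrated by grouping each amendment with the agreement it ultimately modifies. This is a minimal sketch under stated assumptions: it presumes each document record already carries a hypothetical `amends` field pointing at the document it changes, whereas in practice that relationship would have to be inferred from the documents themselves.

```python
from typing import Dict, List, Optional

def cluster_by_root(docs: List[dict]) -> Dict[str, List[str]]:
    """Group documents under the original agreement at the end of each amendment chain."""
    parent: Dict[str, Optional[str]] = {d["id"]: d.get("amends") for d in docs}

    def root(doc_id: str) -> str:
        seen = set()
        # Follow "amends" links upward; the seen-set guards against cycles.
        while parent.get(doc_id) is not None and doc_id not in seen:
            seen.add(doc_id)
            doc_id = parent[doc_id]
        return doc_id

    clusters: Dict[str, List[str]] = {}
    for d in docs:
        clusters.setdefault(root(d["id"]), []).append(d["id"])
    return clusters

docs = [
    {"id": "lease-A"},
    {"id": "lease-A-amend-1", "amends": "lease-A"},
    {"id": "lease-A-amend-2", "amends": "lease-A-amend-1"},
    {"id": "easement-B"},
]
clusters = cluster_by_root(docs)
print(clusters)
```

With documents grouped this way, a decision about a parcel can be made against the whole chain of amendments rather than a single file.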

HyperQ’s process gives engineers the tools they need to keep up with high volumes of applications, providing full visibility into its reasoning along with the information underlying its assessments. Surfacing this information helps engineers exercise their expert judgment to make decisions more quickly; this expert input, in turn, helps improve HyperQ's assessments over time.

Under the hood

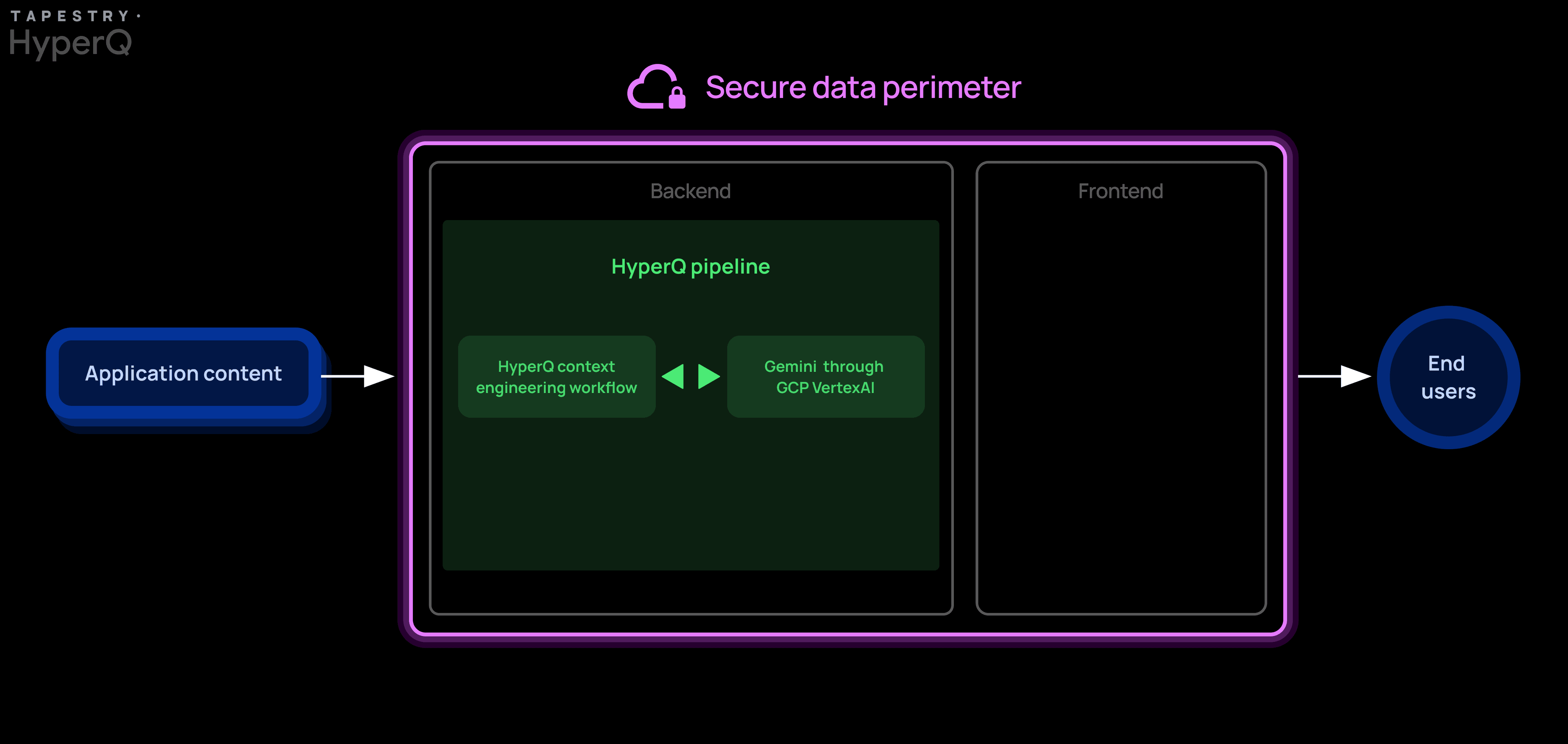

HyperQ uses agentic AI as a backend service, rather than a chatbot interface; this approach employs AI as a tool for batch analysis, versus one that lets users ask conversational questions.

To build HyperQ, we distilled extensive existing domain knowledge from PJM—including its tariff and user manuals, and how employees rated previous interconnection applications—into a detailed instruction set. This approach allowed us to break the problem into structured subtasks, and use agentic AI to parse out these steps to agents that leverage Google’s multimodal LLM, Gemini.

Gemini excels at reasoning and interpretation; HyperQ wraps it in a guided agentic AI workflow. Gemini serves as an effective AI-powered assistant for foundational tasks like advanced document ingestion and multimodal analysis of complex PDF elements (signatures, figures, and diagrams). HyperQ’s guidance is based on direct subject matter expertise, informing how the multimodal LLM finds, interprets, and synthesizes relevant information dispersed across multiple documents and thousands of pages.

These tasks are split among several agents. Some are responsible for recognizing and extracting specific pieces of information from the interconnection application (for example, parcel names or acreage). Other agents are tasked with more nuanced jobs, such as assessing application materials’ compliance with tariff rules, or understanding relationships between linked documents. The architecture has multiple agents operating, some in sequence and some simultaneously, with an orchestration layer providing coordination. The orchestration layer validates the agents’ outputs and stitches them together into easily understood elements like HyperQ’s Application Report. Our design uses Google’s Gemini as the model behind the agents, but it relies on carefully designing and orchestrating the tasks we want the agents to perform.
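The mix of simultaneous and sequential agents with a validating orchestration layer can be sketched with Python's `asyncio`. This is an illustrative pattern only: the agent functions are hypothetical stubs that return fixed values instead of calling a model, and the input fields are invented for the example.

```python
import asyncio

async def extract_parcel_names(app: dict) -> dict:
    await asyncio.sleep(0)  # stand-in for a model call
    return {"parcels": sorted(app["acres_by_parcel"])}

async def extract_acreage(app: dict) -> dict:
    await asyncio.sleep(0)  # stand-in for a model call
    return {"acres": sum(app["acres_by_parcel"].values())}

async def assess_compliance(facts: dict, required_acres: float) -> dict:
    # Depends on extracted facts, so it must run after the extraction agents.
    return {"meets_acreage": facts["acres"] >= required_acres}

def validate(facts: dict) -> None:
    """Orchestration-layer check that every expected field was extracted."""
    missing = [key for key in ("parcels", "acres") if key not in facts]
    if missing:
        raise ValueError(f"missing extracted fields: {missing}")

async def orchestrate(app: dict, required_acres: float) -> dict:
    # Independent extraction agents run concurrently.
    results = await asyncio.gather(extract_parcel_names(app), extract_acreage(app))
    facts: dict = {}
    for result in results:
        facts.update(result)
    validate(facts)
    # The compliance agent runs in sequence, once its inputs are validated.
    facts.update(await assess_compliance(facts, required_acres))
    return facts

app = {"acres_by_parcel": {"P-1": 40.0, "P-2": 25.0}}
report = asyncio.run(orchestrate(app, required_acres=60.0))
print(report)
```

Validating between stages means a failed extraction surfaces as an explicit error rather than silently flowing into the compliance assessment.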

HyperQ would not have been possible without a pair of AI breakthroughs within the past two years: the introduction of reasoning models in late 2024 and the launch of native multimodality in late 2023. Unlike earlier iterations of AI models, modern reasoning models can “reflect” on their own outputs and analysis. This self-correction capability is critical for legal determinations where accuracy and logical consistency are non-negotiable. Native multimodality enables models like Gemini to go beyond reading printed text by performing holistic analysis that correlates legal clauses with visual evidence—such as validating wet signatures—which is required for a complete legal review.

HyperQ builds on those advances. We use several techniques to make the most of the current strengths of agentic AI, while accounting for its limitations, including:

Notably, application documents are developers’ proprietary information and subject to rigorous security requirements. To protect that data, HyperQ operates inside a U.S.-based secure data perimeter enforced with VPC Service Controls. All data processing and storage happens (and remains) within this perimeter, so access is restricted to authorized personnel and the system meets stringent industry security and regulatory requirements.

Benefits of this approach

Application review has traditionally been a time-consuming, highly manual step in the interconnection process. Engineers work long hours cross-checking PDFs, drawings, and other documents to spot the bit of evidence that will satisfy a requirement. They may also need to supplement their own work by bringing on lawyers to assist with the task, adding more time, effort, coordination, and costs.

By providing both AI-driven analysis and a centralized web interface, HyperQ directs human reviewers toward salient information within each application. The workflow is optimized to help engineers process applications more efficiently and further speed the interconnection process to help meet quickly growing demand.

As we continue its development, we intend for HyperQ to save engineers substantial amounts of time and manual work. HyperQ can unlock crucial review bandwidth by freeing them from combing through thousands of pages looking for evidence. It’s our hope that the tool may also help establish a fully consistent standard for application readiness across reviewers, without requiring them to undergo intensive training or cross-check with others.

Most importantly, the goal is to help developers receive faster, clearer assessments of project readiness, so the most ready projects move quickly, while projects that are not ready get useful feedback to help prepare for re-evaluation.

Summary

This is a snapshot of one simple and immediate application of AI to an important part of the overall interconnection process. While it’s not a silver bullet, Tapestry believes AI has the potential to positively transform this and many other aspects of the energy industry.

Anyone working to build grid-connected projects, or decide which projects can safely connect to the grid, goes through some version of the interconnection process. While the details may differ, developers and operators in every jurisdiction have to manage rules, documents, and grid compatibility checks. The architectural foundation of HyperQ should extend to many variations of this problem.

HyperQ is just one example of the many different types of tools we’re developing to address challenges facing stakeholders across the energy industry, from a developer trying to define a high-value project or a utility maintaining its infrastructure, to a grid operator planning for the next decade while also orchestrating electrons for the next five minutes.

We look forward to sharing more about HyperQ and the other tools we’re developing alongside partners who understand and embrace the potential to address real-world challenges with new technology and an innovative mindset.

In the meantime, if you’re interested in joining our team and working at the intersection of cutting-edge applied machine learning and the power systems and energy industry, apply here.

Before joining Tapestry at X, The Moonshot Factory, Page held roles as a go-to-market and commercial leader and advisor at several start-ups, two of which attained unicorn status: Sunrun in rooftop solar energy in the U.S., and Konfio in financial services in Mexico. She was previously co-CEO and founder of Clarus Power, a venture-backed residential solar customer acquisition platform. She was recognized as one of the top 50 Climate Tech Operators in the 2021 Climate Draft and won Fin Earth’s 101 Women in Climate Award in 2024.

Before joining Tapestry at X, The Moonshot Factory, Andy served as President and CEO of PJM Interconnection, the largest power grid in North America and the largest electricity market in the world. Andy is internationally recognized as an expert in electricity market design and power system operation. He has extensive experience in power system engineering, transmission planning, applied mathematics, electricity market design, and implementation. Mr. Ott served as Co-chair of the Energy Transition Forum for 8 years, is an IEEE Fellow, and is an Honorary Member of CIGRE.

When Aviva isn’t powering up our partnerships, you can find her amped up for a game of pickleball. She is also a fan of electrical grid puns.

When he's not helping build tomorrow's grid, Nikhil can be found cooking for family and friends, playing cricket, or figuring out which heavy metal songs make the best lullabies for his son.

Cat balances her passion for technology and leadership with a love for travel and cultural exploration.

Natalie's product management work has her seeking out solutions to speed up the interconnection queue, better manage grid models, and develop a Tapestry platform. She balances her enthusiasm for clean energy with a love of epic backpacking adventures.

Colin's professional mission is speeding up grid interconnection. His personal mission? Speeding down mountains on telemark skis and visiting every type of power plant.

Robin's career spans global power system software, operator training across continents, and advanced data science. Driven by curiosity, he explores the world and embraces new cultures.

Yihang designs user-friendly products. Her dog, Mili, designs user-friendly cuddles.